JJ (Jeong Joon) Park

jjparkcv (at) umich (dot) edu

I'm an assistant professor at the University of Michigan CSE (Office: Leinweber 3154).

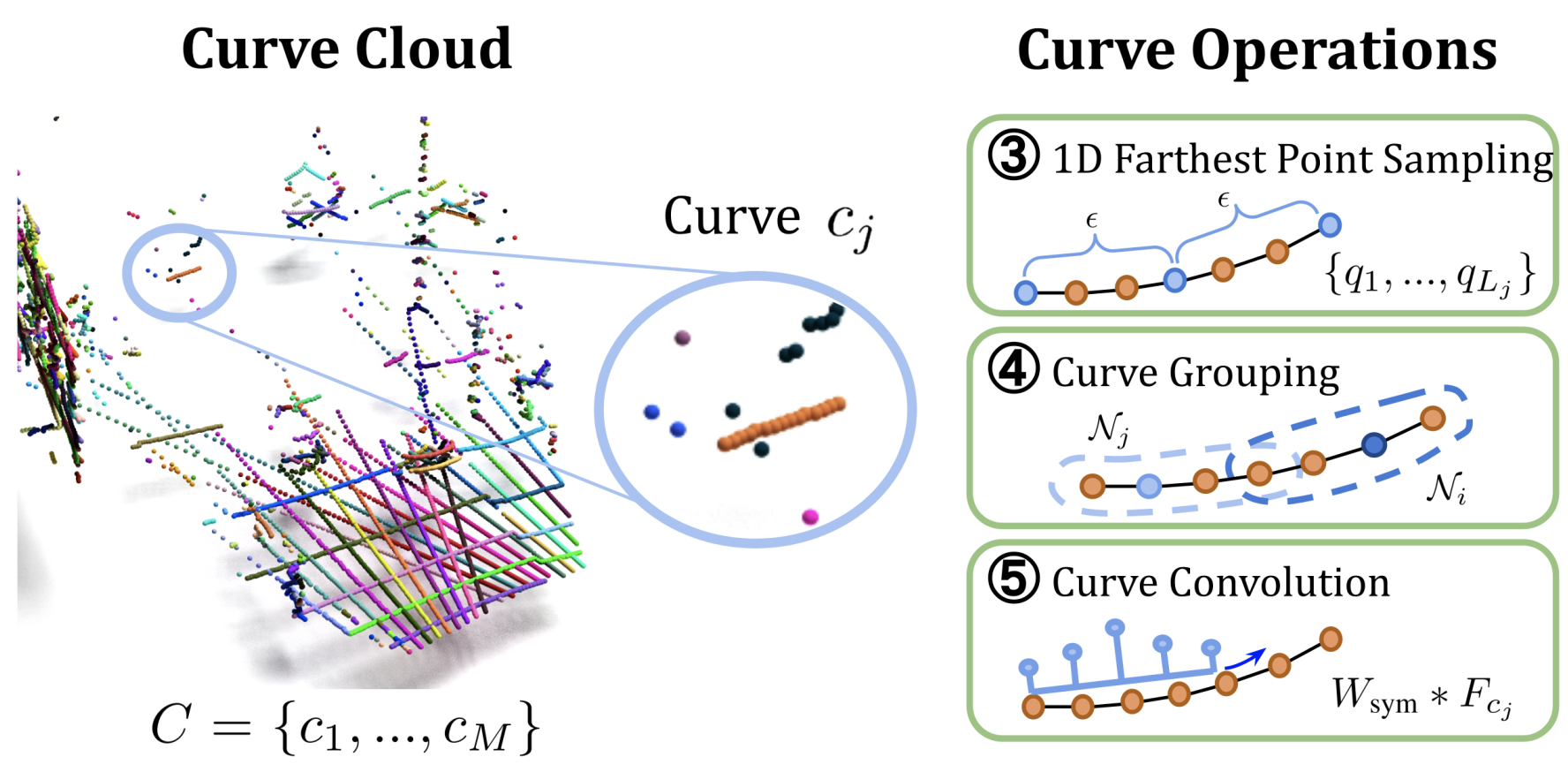

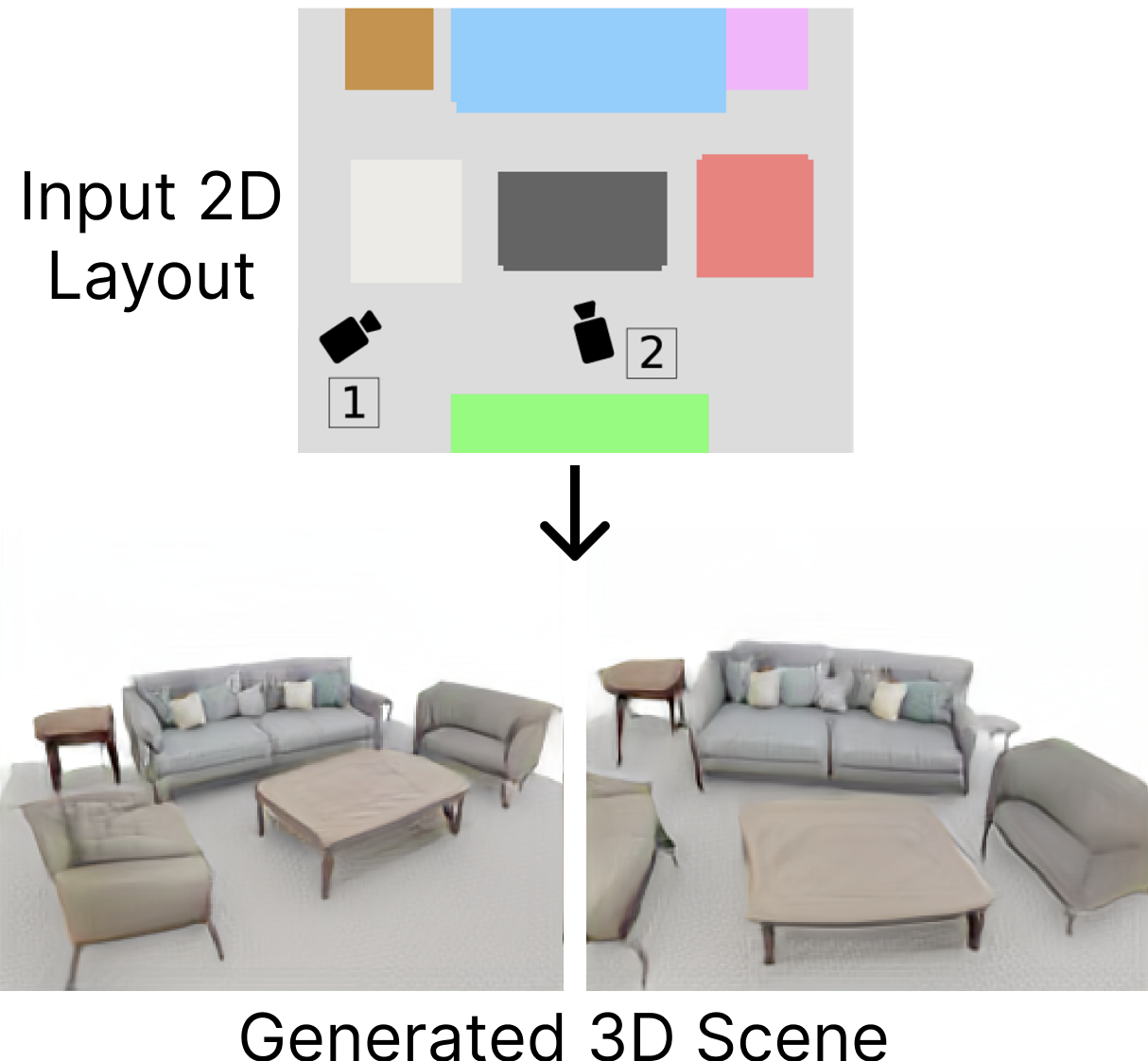

I'm broadly interested in computer vision, graphics, and artificial intelligence. My current research focus is on 3D/4D reconstruction and generative modeling and their applications to robotics, medical imaging, and scientific problems.

I'm looking for students and postdoc applicants!

Please refer to the note below for details. I encourage interested students to apply to the UMichigan CSE PhD program and mention my name in the application.

Prospective Students and Postdocs

I'm looking for PhD and postdoc applicants who have research experience in areas including (but not limited to):

- Geometric AI

- Graphics or scientific simulation

- Computer vision (including medical imaging) and generative models

- Robot learning

- Machine learning

- Computational neuroscience

UMichigan undergraduate and master's students: please send an email with your resume. Note that I expect a significant time commitment (>15 hrs/week). Unfortunately, I will not be able to respond to all emails.

Current Students

Ang Cao (2020-, co-advised with Justin Johnson)

Liam Wang (2024-, NSF GRFP Fellow)

Zichen Wang (2024-)

Lixuan Chen (2024-, Co-advised with Liyue Shen)

Hyelin Nam (2025-)

Yunhao Luo (2025-, Co-advised with Nima Fazeli)

Ling Xiao (2025-, Co-advised with Karthik Duraisamy)